Designing EMTouch: an app for emotion identification training

Role: Founder & Sole Developer

Duration: August 2022 – March 2024

App Store Link

Overview

EMTouch is an app that trains users to identify the emotions behind common facial expressions, with the goal of facilitating communication between neurodivergent and neurotypical individuals. The app has been used at various medical institutions and behavioral therapy organizations.

Problem

There is a disconnect between neurodivergent and neurotypical individuals in society: because our brains are wired differently, the two groups often struggle to communicate. Given the exceptional performance of many neurodivergent minds, it is more effective for the two groups to work together than to try to change neurodivergent thinking patterns to conform to social norms. In a TED Talk, Temple Grandin argued that the world needs all kinds of minds, both neurodivergent and neurotypical.

To collaborate effectively, however, each side needs to understand the other's basic ways of communicating. One such aspect is how simple emotions are expressed.

The goal of EMTouch is to teach neurodivergent individuals, such as those on the autism spectrum, how to read the emotions of neurotypical individuals. Future improvements include working with autistic people to learn how they express themselves, so that the app can also teach neurotypical individuals to understand them.

The Process

1) Research and Consultations

To develop an app that can accurately teach emotion recognition to neurodivergent individuals who have trouble reading facial expressions, I consulted several people for advice. These included Professor Chiun Li Chin at Chung Shan Medical University and autistic friends who remain anonymous here.

The following are the key points I drew from these consultations and my own online research:

- It is indeed possible for neurodivergent, specifically autistic, individuals to analyze and recognize facial expressions

- Emotion recognition through machine learning has been well researched in the field of computer science

- There are few, if any, simple and accessible ways to learn neurotypical social skills. Most solutions involve long hours of behavioral therapy

2) Initial: Ideating Solutions to Pain Points

Based on these pain points, I designed an app that aims to make learning more convenient and to help neurodivergent individuals recognize emotions.

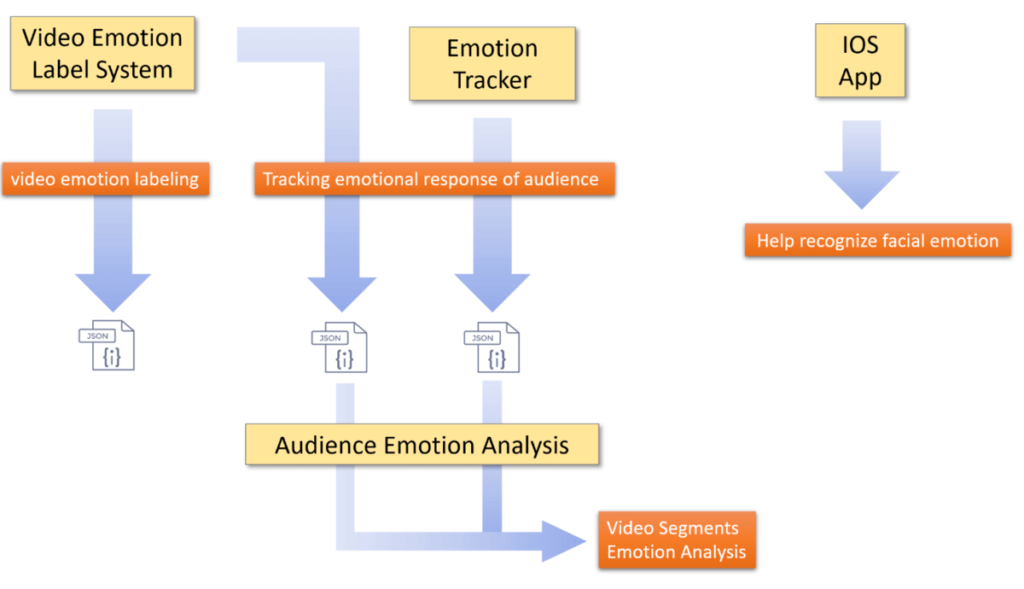

System Architecture

Version 1 Components

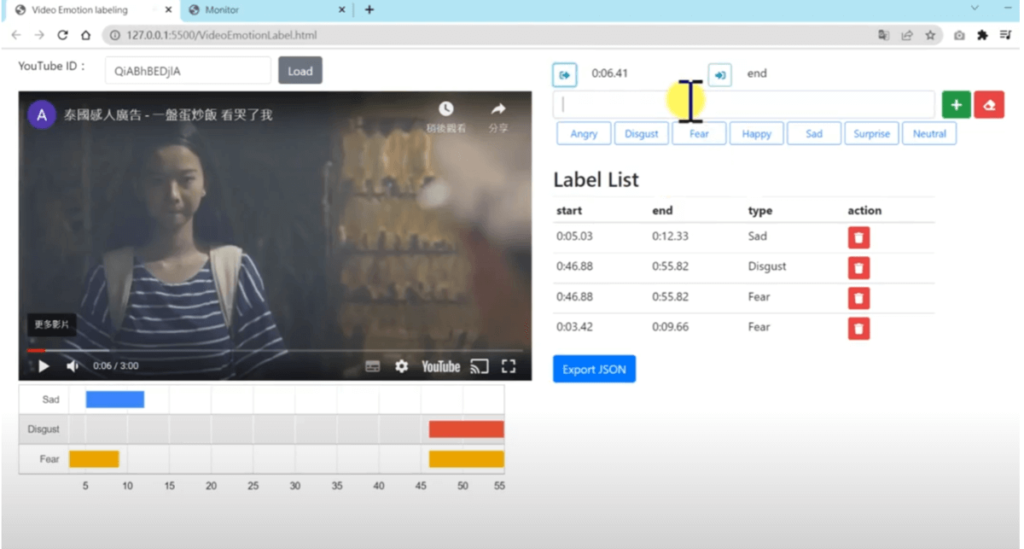

The original idea was to use a video emotion labeling system to mark the “right” reactions to specific parts of a video. A sad scene should be met with a sad expression, while a funny one should be met with a happy expression.

Video Emotion Label System

- A platform for tagging online video clips with emotions.

- Languages: JavaScript, CSS, HTML

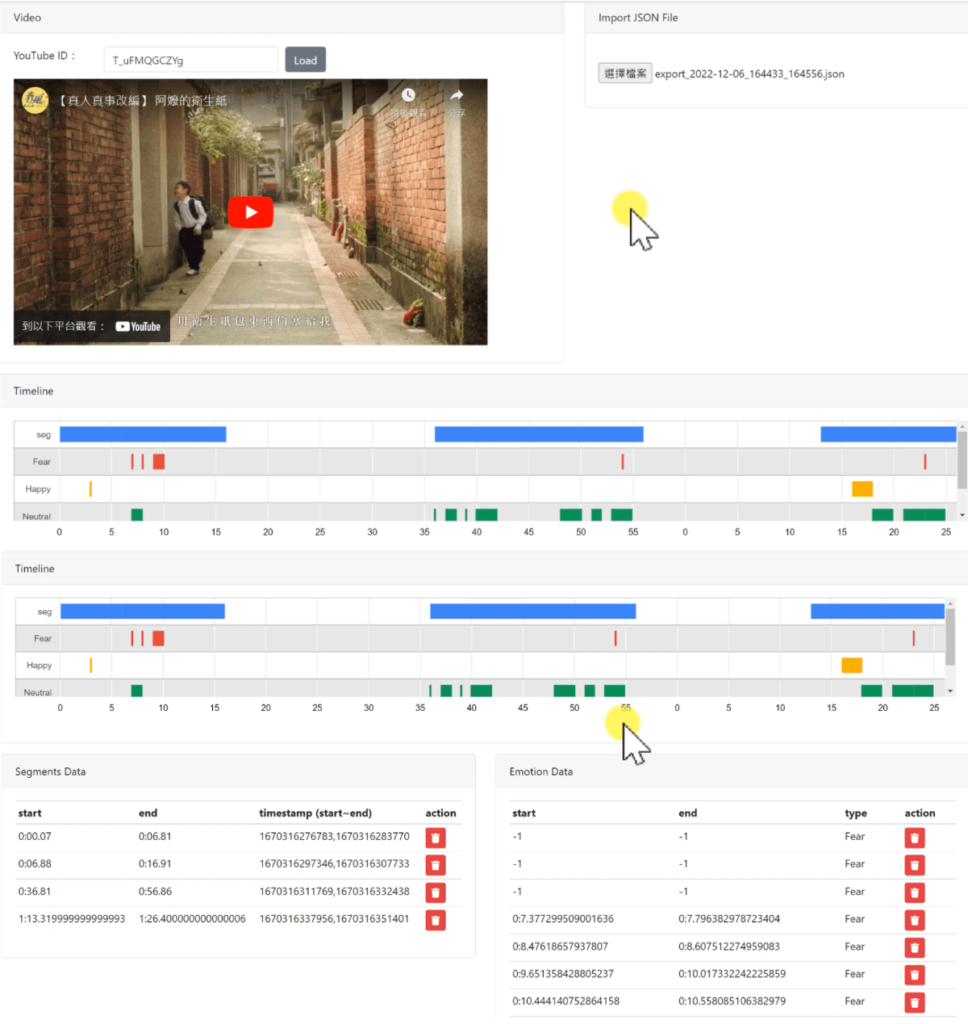
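The labeling tool itself was built with JavaScript, CSS, and HTML. The sketch below restates only its core idea in Python, the language used elsewhere in the project. It assumes each label is a time range in seconds paired with one expected emotion. `SegmentLabel` and `expected_emotion_at` are hypothetical names and are not taken from the tool's actual code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SegmentLabel:
    """Hypothetical label: a video time range tagged with the expected emotion."""
    start_s: float  # segment start in seconds (inclusive)
    end_s: float    # segment end in seconds (exclusive)
    emotion: str    # the "right" reaction for this segment, e.g. "sad"


def expected_emotion_at(labels: List[SegmentLabel], t: float) -> Optional[str]:
    """Return the expected emotion at playback time t, or None if unlabeled."""
    for label in labels:
        if label.start_s <= t < label.end_s:
            return label.emotion
    return None


labels = [
    SegmentLabel(0.0, 12.5, "sad"),     # e.g. a sad scene
    SegmentLabel(12.5, 30.0, "happy"),  # e.g. a funny scene
]
print(expected_emotion_at(labels, 5.0))   # sad
print(expected_emotion_at(labels, 20.0))  # happy
print(expected_emotion_at(labels, 45.0))  # None
```

In this sketch, half-open ranges let adjacent segments share a boundary without overlapping.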

Emotion Tracker

- A real-time emotion recognition system. It captures faces in the environment through a camera and recognizes their emotions. The recognition results can be exported as a JSON file.

- FER-2013 (https://www.kaggle.com/datasets/msambare/fer2013) is used as the training data to build an emotion recognition model based on a convolutional neural network (CNN).

- Language: Python
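The sketch below gives a rough illustration of the JSON output step. It converts one frame's class probabilities from the CNN into a record and can write a list of records to a file. Several details are hypothetical: the field names, the `frame_to_record` and `export_json` helpers, and the probability values. The label list contains the seven FER-2013 categories, but the order shown is an assumption and must match the trained model's output layer.

```python
import json
from typing import Dict, List

# The seven FER-2013 categories. The ORDER is an assumption: it must match
# the order of the trained CNN's output units.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def frame_to_record(timestamp_s: float, probs: List[float]) -> Dict:
    """Turn one frame's softmax output into a JSON-serializable record."""
    if len(probs) != len(EMOTIONS):
        raise ValueError("expected one probability per emotion class")
    best = max(range(len(probs)), key=lambda i: probs[i])  # argmax index
    return {
        "timestamp": timestamp_s,
        "emotion": EMOTIONS[best],
        "confidence": round(probs[best], 3),
        "scores": dict(zip(EMOTIONS, probs)),
    }


def export_json(records: List[Dict], path: str) -> None:
    """Write all frame records to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=2)


# Hypothetical model outputs for two frames. In practice these would come from
# running the CNN on 48x48 grayscale face crops, the FER-2013 image format.
frames = [
    (0.0, [0.05, 0.01, 0.04, 0.70, 0.05, 0.05, 0.10]),
    (0.5, [0.02, 0.01, 0.03, 0.10, 0.64, 0.05, 0.15]),
]
records = [frame_to_record(t, p) for t, p in frames]
print([r["emotion"] for r in records])  # ['happy', 'sad']
```

Calling `export_json(records, "emotions.json")` would then produce the file. Keeping the full score dictionary alongside the top label lets later analysis consider near-misses, not just the winning class.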

Audience Emotion Analysis

- Aggregates the output of modules A (Video Emotion Label System) and B (Emotion Tracker) and generates an analysis of user responses to each video segment.
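Here is a minimal sketch of the aggregation. It assumes the label system exports `(start, end, expected_emotion)` segments and the tracker exports `(timestamp, detected_emotion)` pairs. For each segment, it reports how often the detected emotion matched the expected one and which emotion was most common. The input formats, the `analyze` function, and the `match_rate` metric are illustrative assumptions rather than the module's documented behavior.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical input formats:
#   Segment:   (start_s, end_s, expected_emotion) from the labeling system
#   Detection: (timestamp_s, detected_emotion) from the Emotion Tracker
Segment = Tuple[float, float, str]
Detection = Tuple[float, str]


def analyze(segments: List[Segment], detections: List[Detection]) -> List[Dict]:
    """For each labeled segment, summarize the viewer's detected reactions."""
    report = []
    for start, end, expected in segments:
        # Detected emotions whose timestamps fall inside this segment.
        inside = [emo for t, emo in detections if start <= t < end]
        counts = Counter(inside)
        report.append({
            "segment": (start, end),
            "expected": expected,
            "frames": len(inside),
            # Share of frames matching the expected emotion (None if no frames).
            "match_rate": counts[expected] / len(inside) if inside else None,
            "dominant": counts.most_common(1)[0][0] if inside else None,
        })
    return report


segments = [(0.0, 10.0, "sad"), (10.0, 20.0, "happy")]
detections = [(1.0, "sad"), (4.0, "neutral"), (8.0, "sad"),
              (11.0, "happy"), (15.0, "happy"), (19.0, "surprise")]
for row in analyze(segments, detections):
    print(row["expected"], row["dominant"], round(row["match_rate"], 2))
# sad sad 0.67
# happy happy 0.67
```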

Version 1 Feedback

Labeling the videos turned out to be extremely time-consuming and subjective. Furthermore, because the “right” reactions to a video were so subjective, there was no room to extend the system to more complex emotions.

Version 2 Components

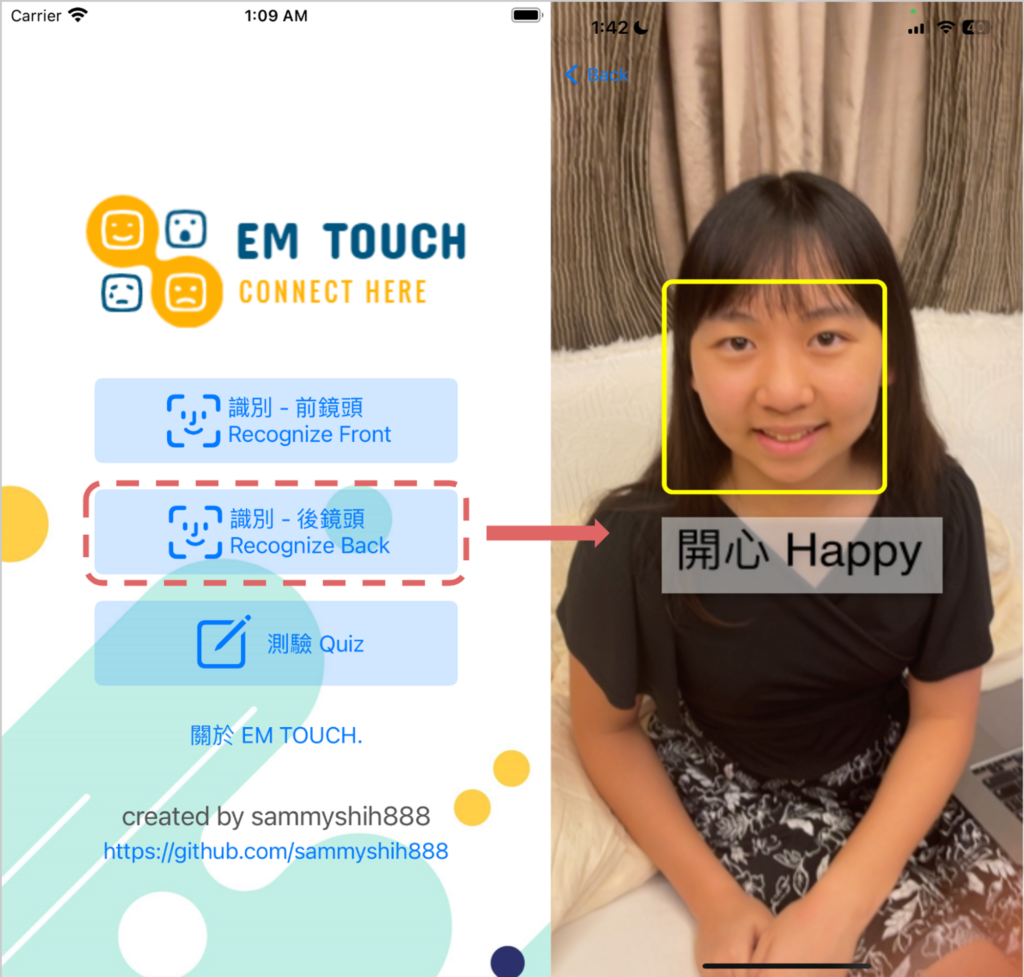

This version was mostly an attempt to help users improve social interaction through both expressing and recognizing emotions, using a machine learning model trained to recognize emotions. The user makes a facial expression, and the phone indicates the expression it detects. The idea was for autistic individuals to experiment with their facial expressions until they achieved the expressions they wanted to make.

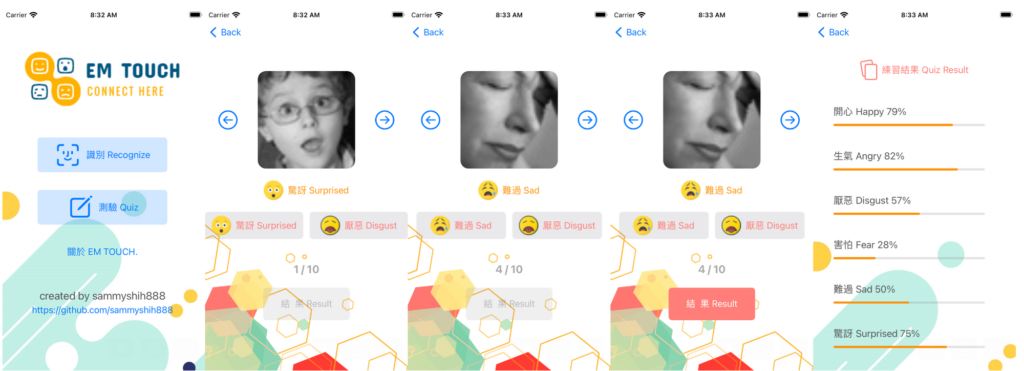
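The practice loop can be sketched as a comparison between the expression the user is aiming for and the model's top prediction. This is a hypothetical sketch: the `practice_feedback` function, the 0.5 confidence threshold, and the feedback strings are assumptions. In the app, the scores would come from the emotion recognition model rather than a hard-coded dictionary.

```python
from typing import Dict

# Hypothetical cutoff: below this, the prediction is treated as too uncertain.
MIN_CONFIDENCE = 0.5


def practice_feedback(target: str, scores: Dict[str, float]) -> str:
    """Compare the model's top prediction with the expression being practiced."""
    detected, confidence = max(scores.items(), key=lambda kv: kv[1])
    if confidence < MIN_CONFIDENCE:
        return "Unsure: try a clearer expression"
    if detected == target:
        return f"Match: {detected}"
    return f"Detected {detected}, target is {target}"


# Hypothetical model output for one camera frame while practicing "happy".
scores = {"happy": 0.2, "sad": 0.1, "surprise": 0.6, "neutral": 0.1}
print(practice_feedback("happy", scores))  # Detected surprise, target is happy
```

A threshold like this keeps the app from confidently naming an expression when the model is split between classes.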

Additionally, a simple quiz feature was added to the app to test the expression recognition abilities of the user.
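A quiz of this kind reduces to comparing each answer with the correct label for a face photo. The `Question` type, the `score_quiz` function, and the file names below are hypothetical. They are meant only to show the scoring logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Question:
    image: str   # hypothetical path to a face photo
    answer: str  # correct emotion label for that photo


def score_quiz(questions: List[Question], responses: List[str]) -> int:
    """Count how many responses match the correct emotion for each photo."""
    if len(responses) != len(questions):
        raise ValueError("one response per question expected")
    return sum(q.answer == r for q, r in zip(questions, responses))


quiz = [Question("face_01.jpg", "happy"),
        Question("face_02.jpg", "angry"),
        Question("face_03.jpg", "fear")]
print(score_quiz(quiz, ["happy", "sad", "fear"]))  # 2
```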

An iOS app was created (an earlier version of https://apps.apple.com/tw/app/em-touch/id6448122198 that is no longer available for download).

Version 2.2

This update added a front camera option alongside the back camera, making the app easier to use.

Additional Consultations and Feedback

Aside from the low accuracy when judging live emotions, one key piece of feedback came from Physical Medicine and Rehabilitation physician Dr. Junhua Zhou: giving autistic individuals this tool would not really help them. Instead of learning to make and recognize expressions, they would use the app to recognize expressions for them. Holding a camera up to a person’s face would also likely raise concerns and may not be socially acceptable. In addition, Dr. Zhou felt that the quiz feature was not interactive enough. He suggested focusing on a more interactive way to teach autistic individuals about facial expressions.

Version 3 Components

The third component, the quiz, was changed into a more interactive game to help test a user’s ability to recognize the correct facial expressions. A limited time frame per game, as well as a simple score and leaderboard system, were implemented to add competition and excitement to the game.
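The timed game and leaderboard can be sketched as follows. Several details are assumptions and are not documented for EMTouch: the 60-second limit, the five-entry leaderboard, and the `TimedGame` and `update_leaderboard` names. The clock is injectable so the example can simulate time passing instead of waiting a real minute.

```python
import time
from typing import Callable, List, Tuple

ROUND_SECONDS = 60.0  # hypothetical time limit per game


class TimedGame:
    """One timed game: answers only count while the clock is running."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        self.start = clock()
        self.score = 0

    def time_left(self) -> float:
        return max(0.0, ROUND_SECONDS - (self.clock() - self.start))

    def submit(self, guess: str, correct: str) -> bool:
        """Record an answer; returns False (and ignores it) once time is up."""
        if self.time_left() <= 0:
            return False
        if guess == correct:
            self.score += 1
        return True


def update_leaderboard(board: List[Tuple[str, int]], name: str, score: int,
                       size: int = 5) -> List[Tuple[str, int]]:
    """Add a result and keep only the top `size` scores, highest first."""
    board = board + [(name, score)]
    board.sort(key=lambda entry: entry[1], reverse=True)
    return board[:size]


# Simulated clock so the example runs instantly.
fake_now = [0.0]
game = TimedGame(clock=lambda: fake_now[0])
game.submit("happy", "happy")  # counts: correct, within time
fake_now[0] = 30.0
game.submit("sad", "angry")    # counts as an answer, but wrong
fake_now[0] = 61.0
game.submit("fear", "fear")    # rejected: time is up
print(game.score)  # 1
board = update_leaderboard([("Ann", 3), ("Ben", 0)], "You", game.score)
print(board)  # [('Ann', 3), ('You', 1), ('Ben', 0)]
```

`time.monotonic` is the default clock because, unlike wall-clock time, it never jumps backward, so a game's remaining time cannot be distorted by system clock changes.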